Is there a tool for Apache comparable to Microsoft's IIS LogParser?

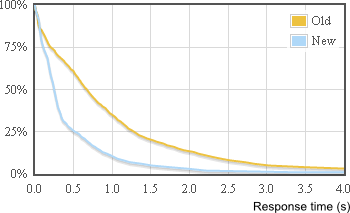

How can I get this graph:

(from Speeding up StackExchange)

It shows what percentage of web requests took longer than N seconds. I have IIS logs and LogParser installed, but I'm not sure how to proceed.
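To make the quantity behind the graph concrete: for each threshold N, it is the share of requests whose `time-taken` exceeds N seconds. Below is a minimal sketch of that calculation in Python rather than LogParser. It assumes the `time-taken` values (logged by IIS in milliseconds) have already been pulled out of the log; the function name and the sample numbers are made up for illustration.

```python
def percent_slower_than(times_ms, thresholds_s):
    """Return {N: percent of requests that took longer than N seconds}."""
    total = len(times_ms)
    if total == 0:
        return {n: 0.0 for n in thresholds_s}
    return {
        n: 100.0 * sum(1 for t in times_ms if t > n * 1000) / total
        for n in thresholds_s
    }

# Hypothetical time-taken values in milliseconds, not real log data
sample_times_ms = [120, 450, 980, 1500, 2300, 3100, 5200, 800, 60, 10400]

result = percent_slower_than(sample_times_ms, [1, 2, 5, 10])
for n, pct in result.items():
    print(f"> {n}s: {pct:.1f}%")
```

Plotting `result` with N on the x-axis gives a curve of the same kind as the one in the graph.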

I'm looking for examples of integrating LogParser (LP) into this workflow:

- A SQL Server event indicates that IIS threw an error.

- SQL parses the body of the error message and generates an LP command line that queries the IIS logs to collect more information.

- An email is dispatched to tech support with a link to an HTML (or .aspx) page that runs the query and presents LP's output.

So I think the question boils down to:

How do I hook LP up to IIS7?

EDIT: Ok...re-boiling the question. When an IIS exception is triggered I want to see all the other log rows where the session cookie is the same as the session cookie found in the error event. Yes, I could live with polling IIS logs via a scheduled task - that takes ELMAH/SQL out of the equation.

But now the question becomes: find all log rows where the session cookie equals the session cookie found in a row that had an error.
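The re-boiled question is a two-pass lookup: first collect the session ids seen on error rows, then return every row carrying one of those ids. Here is a rough sketch of that logic in Python, not LogParser. It assumes the log includes the `cs(Cookie)` field, that the session cookie is named `ASP.NET_SessionId`, and that "error" means a 5xx `sc-status`; the helper names and the sample rows are invented for illustration.

```python
def parse_w3c(lines):
    """Yield one dict per log row, using the #Fields: directive for column names."""
    fields = []
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#"):
            yield dict(zip(fields, line.split(" ")))

def session_id(row, cookie_name="ASP.NET_SessionId"):
    """Extract the session cookie value from cs(Cookie), or None if absent."""
    for part in row.get("cs(Cookie)", "").split(";"):
        # IIS writes spaces as '+', so cookies arrive as "a=1;+b=2"
        name, _, value = part.strip("+").partition("=")
        if name == cookie_name:
            return value
    return None

def rows_sharing_error_sessions(rows):
    """Return every row whose session id also appears on a 5xx row."""
    rows = list(rows)
    error_ids = {
        session_id(r) for r in rows
        if r.get("sc-status", "").startswith("5") and session_id(r)
    }
    return [r for r in rows if session_id(r) in error_ids]

# Made-up sample rows in W3C extended format
sample_log = """#Fields: time cs-uri-stem cs(Cookie) sc-status
10:00:01 /home ASP.NET_SessionId=abc;+theme=dark 200
10:00:02 /home ASP.NET_SessionId=xyz 200
10:00:05 /checkout ASP.NET_SessionId=abc;+theme=dark 500
10:00:09 /about - 200
""".splitlines()

matches = rows_sharing_error_sessions(parse_w3c(sample_log))
for row in matches:
    print(row["time"], row["cs-uri-stem"], row["sc-status"])
```

Run from a scheduled task, a script along these lines would take ELMAH/SQL out of the equation, as described above.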

I'm working on a public website that is used by both external visitors and internal employees. I'm after the external visitor hits, but I can't think of a good way to filter out the internal IP ranges.

Using LogParser, what is the best way to filter IISW3C logs by IP range?

This is all I've come up with so far, which can't possibly be the best or most efficient way.

WHERE [c-ip] NOT LIKE '10.10.%' AND [c-ip] NOT LIKE '10.11.%'

Any help is appreciated.
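For comparison, string prefixes stop scaling once the internal ranges do not fall on octet boundaries, and the usual alternative is to compare addresses numerically against CIDR blocks. The sketch below shows that idea in Python with the standard `ipaddress` module. It is not a LogParser query, and the single `10.10.0.0/15` block (which spans 10.10.x.x and 10.11.x.x) is only an assumption based on the two prefixes in the question.

```python
import ipaddress

# Assumed internal ranges; 10.10.0.0/15 covers 10.10.0.0 through 10.11.255.255
INTERNAL_NETWORKS = [ipaddress.ip_network("10.10.0.0/15")]

def is_external(c_ip):
    """True when the client IP is outside every internal network."""
    addr = ipaddress.ip_address(c_ip)
    return not any(addr in net for net in INTERNAL_NETWORKS)

# Hypothetical c-ip values
client_ips = ["10.10.3.7", "10.11.200.1", "10.12.0.1", "66.249.68.47"]
external = [ip for ip in client_ips if is_external(ip)]
print(external)  # ['10.12.0.1', '66.249.68.47']
```

Adding another internal range is then a one-line change to `INTERNAL_NETWORKS` rather than another `LIKE` clause.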

As Stack Overflow grows, we're starting to look closely at our IIS logs to identify problem HTTP clients -- things like rogue web spiders, users who have a large page set to refresh every second, poorly written one-off web scrapers, tricksy users who try to increment page count a zillion times, and so forth.

I've come up with a few LogParser queries that help us identify most of the oddities and abnormalities when pointed at an IIS log file.

Top bandwidth usage by URL

    SELECT TOP 50 DISTINCT
        SUBSTR(TO_LOWERCASE(cs-uri-stem), 0, 55) AS Url,
        COUNT(*) AS Hits,
        AVG(sc-bytes) AS AvgBytes,
        SUM(sc-bytes) AS ServedBytes
    FROM {filename}
    GROUP BY Url
    HAVING Hits >= 20
    ORDER BY ServedBytes DESC

    url                                               hits  avgbyte served
    ------------------------------------------------- ----- ------- -------
    /favicon.ico                                      16774 522     8756028
    /content/img/search.png                           15342 446     6842532

Top hits by URL

    SELECT TOP 100
        cs-uri-stem AS Url,
        COUNT(cs-uri-stem) AS Hits
    FROM {filename}
    GROUP BY cs-uri-stem
    ORDER BY COUNT(cs-uri-stem) DESC

    url                                               hits
    ------------------------------------------------- -----
    /content/img/sf/vote-arrow-down.png               14076
    /content/img/sf/vote-arrow-up.png                 14018

Top bandwidth and hits by IP / User-Agent

    SELECT TOP 30
        c-ip AS Client,
        SUBSTR(cs(User-Agent), 0, 70) AS Agent,
        SUM(sc-bytes) AS TotalBytes,
        COUNT(*) AS Hits
    FROM {filename}
    GROUP BY c-ip, cs(User-Agent)
    ORDER BY TotalBytes DESC

    client        user-agent                                    totbytes  hits
    ------------- --------------------------------------------- --------- -----
    66.249.68.47  Mozilla/5.0+(compatible;+Googlebot/2.1;       135131089 16640
    194.90.190.41 omgilibot/0.3++omgili.com                     133805857 6447

Top bandwidth by hour by IP / User-Agent

    SELECT TOP 30
        TO_STRING(time, 'h') AS Hour,
        c-ip AS Client,
        SUBSTR(cs(User-Agent), 0, 70) AS Agent,
        SUM(sc-bytes) AS TotalBytes,
        COUNT(*) AS Hits
    FROM {filename}
    GROUP BY c-ip, cs(User-Agent), Hour
    ORDER BY SUM(sc-bytes) DESC

    hr client        user-agent                                totbytes hits
    -- ------------- ----------------------------------------- -------- ----
    9  194.90.190.41 omgilibot/0.3++omgili.com                 30634860 1549
    10 194.90.190.41 omgilibot/0.3++omgili.com                 29070370 1503

Top hits by hour by IP / User-Agent

    SELECT TOP 30
        TO_STRING(time, 'h') AS Hour,
        c-ip AS Client,
        SUBSTR(cs(User-Agent), 0, 70) AS Agent,
        COUNT(*) AS Hits,
        SUM(sc-bytes) AS TotalBytes
    FROM {filename}
    GROUP BY c-ip, cs(User-Agent), Hour
    ORDER BY Hits DESC

    hr client        user-agent                                hits totbytes
    -- ------------- ----------------------------------------- ---- --------
    10 194.90.190.41 omgilibot/0.3++omgili.com                 1503 29070370
    12 66.249.68.47  Mozilla/5.0+(compatible;+Googlebot/2.1    1363 13186302

The {filename} of course would be a path to an IIS logfile, such as

c:\working\sologs\u_ex090708.log
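As a small illustration of the `{filename}` substitution, here is a sketch in Python that fills the placeholder in one of the queries above and assembles a LogParser command line using the `-i:IISW3C` input format. The function name is hypothetical, and it assumes `LogParser.exe` is on the PATH and that the log path contains no spaces (a path with spaces would need quoting inside the FROM clause).

```python
QUERY_TEMPLATE = (
    "SELECT TOP 100 cs-uri-stem AS Url, COUNT(cs-uri-stem) AS Hits "
    "FROM {filename} "
    "GROUP BY cs-uri-stem "
    "ORDER BY COUNT(cs-uri-stem) DESC"
)

def build_logparser_command(log_path, exe="LogParser.exe"):
    """Fill in the log path and return the argument list for LogParser."""
    query = QUERY_TEMPLATE.format(filename=log_path)
    return [exe, "-i:IISW3C", query]

command = build_logparser_command(r"c:\working\sologs\u_ex090708.log")
print(command)

# To actually run it on a machine with LogParser installed:
# import subprocess
# subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell-quoting problems with the query text.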

I did a lot of web searches for good IIS LogParser queries and found precious little. These 5, above, have helped us tremendously in identifying serious problem clients. But I'm wondering -- what are we missing?

What other ways are there to slice and dice the IIS logs (preferably with LogParser queries) to mine them for statistical anomalies? Do you have any good IIS LogParser queries you run on your servers?